Small language models (SLMs): A beginner's guide

For enterprises in search of the right AI model, bigger isn't always better. In fact, many are finding that small language models (SLMs) tailored to specific tasks and workflows are more effective and efficient than general-purpose large language models (LLMs).

SLMs have a more compact architecture and fewer parameters, and are trained on smaller, more focused datasets than their larger counterparts. As a result, they're less expensive to train and maintain, and they require fewer computational resources than LLMs, often without sacrificing performance on the tasks they're built for.

This article explores the benefits, use cases, and real-world examples of small language models. Read on to learn why SLMs are increasingly favored over LLMs when accuracy, efficiency, and cost-effectiveness are top priorities.


What are small language models?

Small language models are compact AI models designed for specific natural language tasks. Essentially smaller versions of LLMs, they're designed to be more efficient, performant, and cost-effective than large models at targeted tasks such as natural language understanding, reasoning, math, and code generation.

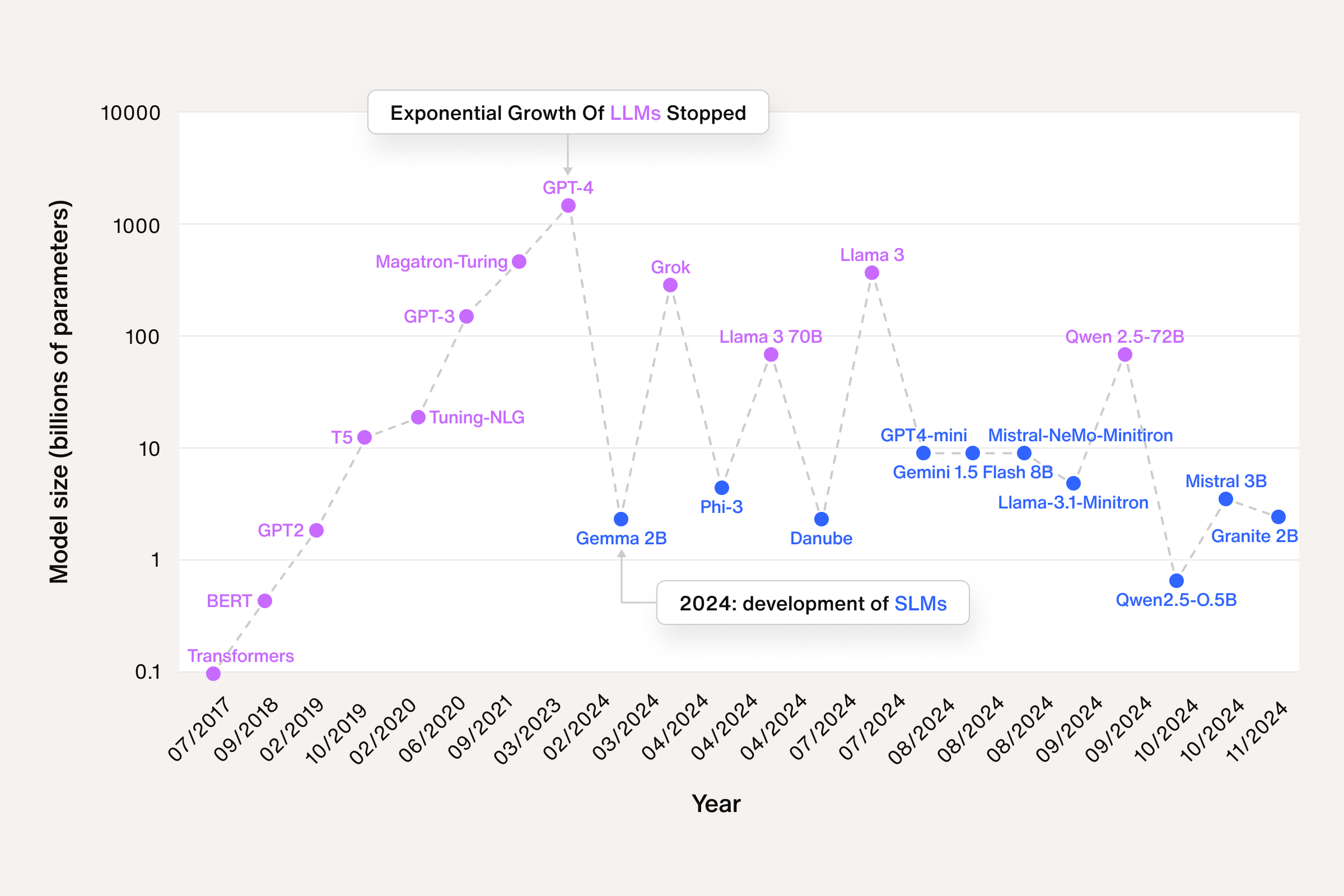

As the name suggests, SLMs are smaller in scope and scale than large models. They have significantly fewer parameters, which are the internal variables that a model learns during training and that drive its performance. Whereas the largest models, such as GPT-4, are reported to have parameter counts in the hundreds of billions or more (exact figures are often undisclosed), small models have parameter counts that range from millions to a few billion.

This difference in size translates to numerous advantages:

Benefits of small language models

1. Efficiency

SLMs require fewer computational resources and less memory, making them faster to train and deploy. Unlike LLMs, which typically require clusters of graphics processing units (GPUs), small models can run on standard hardware or even mobile devices. This makes them suitable for scenarios with limited computational resources, such as edge computing, IoT devices, or on-device AI agents.
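To make the hardware claim concrete, here is a back-of-the-envelope sketch of how much memory a model's weights occupy. It assumes memory is roughly parameter count times bytes per parameter and ignores activations, caches, and runtime overhead, so real usage will be higher. The function name and the example model sizes are illustrative, not taken from any particular library.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: memory ~= parameter count x bytes per parameter.
# Activations, caches, and runtime overhead are ignored.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative model sizes only.
    for name, params in [("0.5B model", 0.5e9), ("7B model", 7e9), ("70B model", 70e9)]:
        sizes = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name} -> {sizes}")
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB for its weights at 16-bit precision, which is within reach of a single high-end consumer GPU, while a 70-billion-parameter model needs about ten times that.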

2. Customization

SLMs are easier to fine-tune to specific tasks or business domains, and so excel in targeted use cases. For example, an SLM tailored to AI customer service can better navigate the nuances of customer interactions, product details, and company policy than a generic LLM, providing more accurate and relevant outputs that align with specific regulatory requirements.

3. Cost-effectiveness

Due to their smaller size, SLMs are cheaper to train, deploy, and maintain. For instance, many small models can be fine-tuned on a single GPU. These reduced system requirements translate directly to lower computational and financial costs.

4. Enhanced privacy and security

SLMs can be deployed locally or in private cloud environments, ensuring sensitive data remains under organizational control. This potential for greater security and privacy makes them suitable for highly regulated industries such as finance and healthcare.

5. Lower latency

Fewer parameters translate to faster processing times, making SLMs ideal for real-time applications, such as financial trading, real-time data analysis, or AI customer service.

6. Accessibility

With their lower resource requirements, SLMs are a viable option for smaller enterprises or even certain teams in a larger organization. Without having to invest in multiple GPUs and advanced hardware, organizations can adopt tailored AI solutions without the burden of an LLM.

Overall, SLMs offer a balance of domain-specific performance and computational efficiency. Designed to perform specific language tasks with efficiency and precision, they're a viable option for enterprises focused on real-world applications instead of brute computational power.


Small language models vs large language models: Key differences

As you consider which might serve the needs of your organization best, here’s a comparison table to recap the differences:

SLMs and LLMs can be combined within orchestrated AI systems as part of hybrid AI models. A hybrid approach uses intelligent routing techniques to efficiently distribute AI workloads among models, assigning the specific, simple tasks to SLMs and more complex, general-purpose tasks to LLMs.
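The routing idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular orchestration product works: the task labels in `SLM_TASKS`, the `route_request` function, and the word-count threshold are all hypothetical, and a production router would more likely use a classifier or confidence scores than fixed rules.

```python
from dataclasses import dataclass


@dataclass
class Route:
    model_name: str  # "slm" or "llm" (placeholder tier names)
    reason: str


# Hypothetical task labels that a small, fine-tuned model is assumed to handle well.
SLM_TASKS = {"faq", "order_status", "sentiment", "summarize_ticket"}


def route_request(task: str, prompt: str, max_slm_words: int = 200) -> Route:
    """Pick a model tier using simple, illustrative rules."""
    if task in SLM_TASKS and len(prompt.split()) <= max_slm_words:
        return Route("slm", "narrow task and short prompt")
    return Route("llm", "open-ended task or long prompt")


if __name__ == "__main__":
    print(route_request("faq", "How do I reset my password?"))
    print(route_request("contract_review", "Compare these two agreements."))
```

The design choice here is to default to the larger model whenever a request falls outside the small model's known territory, so that routing mistakes cost money rather than answer quality.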

Small language models vs domain-specific LLMs

SLMs are more compact and tailored than LLMs, but they lack deep specialization due to their smaller size. This is where domain-specific LLMs come in. These are highly tailored for specific industries or business domains, and so provide greater accuracy, but at a higher cost for training, maintenance, and computation than SLMs.

For example, in healthcare, domain-specific LLMs serve as the core of vertical AI agents that specialize in targeted use cases like medical diagnostics or patient data summaries. These agents are fine-tuned using only the most relevant datasets, such as healthcare literature, medical journals, patient records, and organizational compliance protocols. This niche training equips the vertical LLM agent with the deep domain intelligence needed to produce highly accurate, relevant outputs, without the interference of generic training data of a general-purpose LLM.

Often, domain-specific LLMs begin as small language models that get expanded and refined with LLM fine-tuning techniques.

Micro language models for AI customer service

Another application of small language models is micro language models (micro LLMs). These focus on even narrower, highly specific datasets to produce outputs with fine granularity and precision in environments where computational power and memory are limited.

For example, a micro LLM tailored to AI customer service could be fine-tuned to understand the nuances of customer interactions, product details, and company policies. This enables the autonomous AI concierge to provide accurate and relevant responses to customer inquiries, thereby reducing response times and increasing customer satisfaction while minimizing concerns about accuracy, data privacy, and compliance.

"What we're starting to see is not a shift from large to small, but a shift from a singular category of models to a portfolio of models where customers get the ability to make a decision on what is the best model for their scenario."

Sonali Yadav, Principal Product Manager for Gen AI at Microsoft

How do small language models work?

LLMs serve as the foundation for SLMs. Like large models, SLMs are pre-trained on large datasets and use a transformer-based neural network architecture to process and generate natural language content.

Unlike large models, though, SLMs often employ specially designed architectures that can handle longer sequences at lower computational cost, optimizing both performance and efficiency. They’re trained on smaller, more specialized datasets, which enables them to perform better on specific tasks.

SLMs also employ a variety of training techniques to achieve their smaller size and greater efficiency. These model compression techniques condense the broad understanding of LLMs into a more tailored, domain-specific intelligence, without compromising the capabilities to process, interpret, and generate natural language.

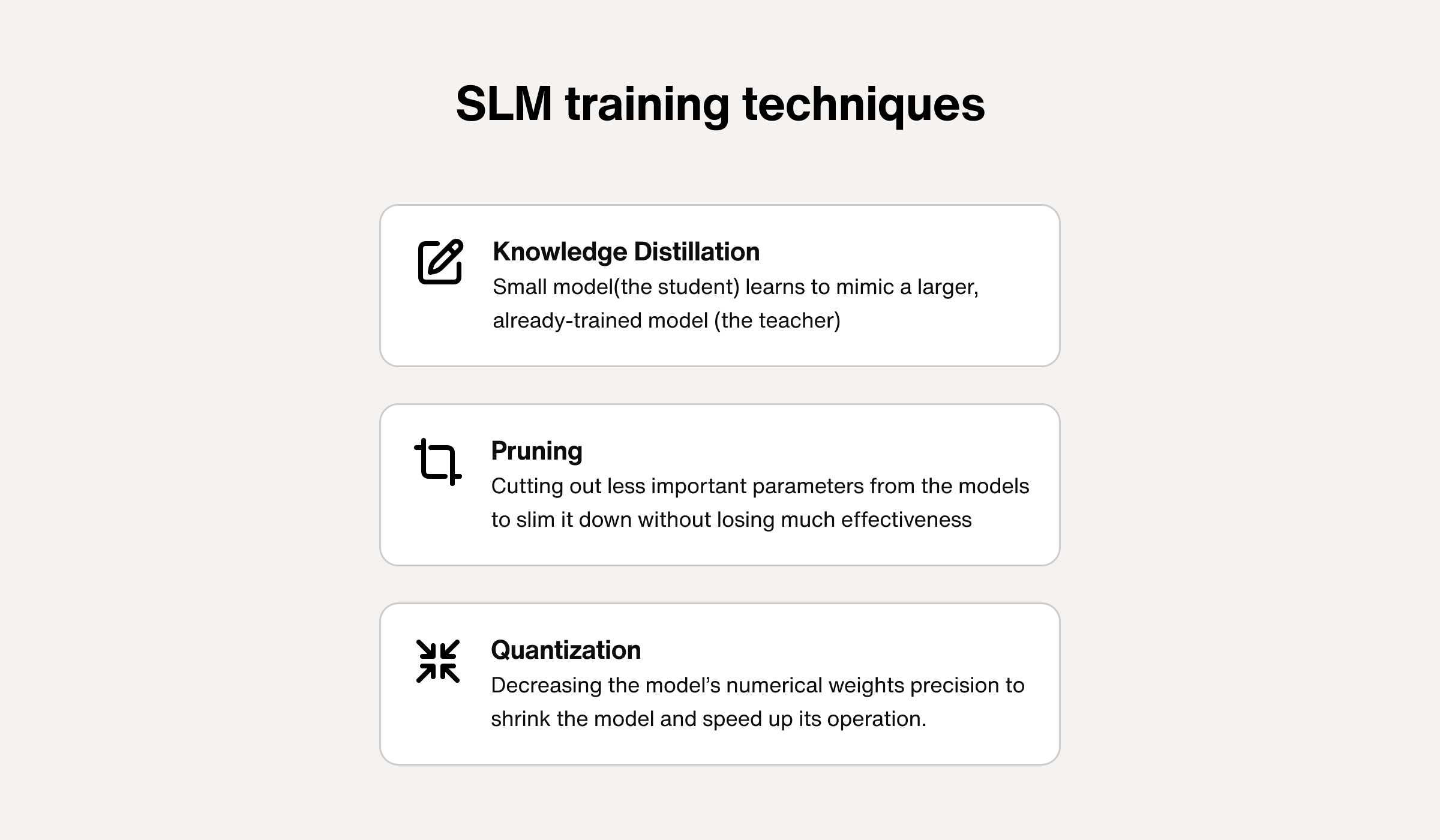

SLM training techniques include:

Knowledge distillation: A smaller model learns from a larger, more complex model (LLM) by mimicking its outputs, effectively transferring key knowledge and capabilities to the SLM without the full complexity.

Pruning and quantization: These model compression techniques remove unnecessary connections from the model (pruning) and lower the precision of its weights (quantization) to further reduce its size, complexity, and resource requirements.

Transfer learning: This machine learning technique reuses a model trained for one task to improve its performance on a related task. For example, an image recognition model trained to recognize dogs can easily learn to recognize cats.
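The first two techniques above can be sketched with plain Python. This is a toy illustration under simplifying assumptions, not a training recipe: the distillation loss is the KL divergence between temperature-softened teacher and student outputs (real setups usually combine it with a standard task loss), pruning is done by a simple magnitude threshold, and quantization is symmetric 8-bit with a single scale. All function names are made up for this example.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities; higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions: zero when the student matches."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def prune_by_magnitude(weights: List[float], threshold: float) -> List[float]:
    """Pruning: zero out weights whose absolute value is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Quantization: map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the quantized integers."""
    return [v * scale for v in q]


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]
    print("loss, matching student:", distillation_loss(teacher, teacher))
    print("loss, different student:", distillation_loss([0.1, 1.0, 2.0], teacher))
    pruned = prune_by_magnitude([0.5, -0.01, 0.2, 0.003], threshold=0.05)
    q, scale = quantize_int8(pruned)
    print("pruned:", pruned, "quantized:", q, "restored:", dequantize(q, scale))
```

The quantized weights take one byte each instead of four, at the cost of a rounding error of at most half the scale per weight.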

Use cases for small language models

Organizations can customize SLMs to their specific needs by fine-tuning them on domain-specific datasets. This adaptability means SLMs can be tailored to a variety of real-world applications that require domain-specific knowledge, jargon, or strict regulatory compliance, such as:

Language translation: Many SLMs are multilingual in addition to being context-aware, enabling them to translate between languages quickly while retaining the meaning and nuance of the original text.

Sentiment analysis: Capable of categorizing huge volumes of text, SLMs can analyze text and the sentiment behind it, making them useful for evaluating customer feedback, improving products, or adjusting marketing strategies.

Content summarization: Small models like Llama 3.2 3B or Gemini Nano can summarize audio recordings or conversation transcripts, then automatically create action items like calendar events or meeting notes.

Customer service automation: SLMs can power chatbots, AI concierges, and other AI for customer service tools that handle routine interactions to enhance operational efficiency and customer experience.

Content and code generation: SLMs are well suited to completing and generating text as well as software code. For instance, OpenAI Codex can generate, explain, and translate code from a natural language AI prompt.

Digital assistants and copilots: SLMs can enhance productivity and task performance when implemented in virtual assistants or chatbots. For example, GitHub Copilot was originally built on OpenAI Codex for its NLP and code generation capabilities.

Predictive maintenance: Manufacturers can deploy small models directly on local edge devices like sensors or IoT devices, treating the SLM as a tool that gathers, analyzes, and integrates data to predict maintenance needs in real-time.


Small language model examples

Small language models first emerged in the late 2010s with distilled versions of BERT, such as DistilBERT and TinyBERT (both introduced in 2019), and gained wider prominence from 2023 onward. They’re seeing increasing development among tech leaders and researchers as they gain traction among businesses due to their advantages in efficiency, cost, and customizability.

Here are some of the most powerful and popular examples of small language models:

DistilBERT: A lighter version of Google’s foundation model, BERT, that uses knowledge distillation to make it 40% smaller and 60% faster than its predecessor, while retaining 97% of BERT’s original capabilities for natural language understanding. View on Hugging Face.

Gemma: A family of lightweight, small models built from the same research and technology as the Google Gemini LLMs. Deployable on laptops or mobile phones, the Gemma SLMs are well-suited for text generation, summarization, question answering, and coding tasks. Available in 2, 7, or 9 billion parameter sizes. View on Hugging Face.

Qwen2: A family of language models whose smaller variants range from 0.5 billion to 7 billion parameters, each suited to different tasks. For instance, a mobile app might call for the super lightweight 0.5B model, while the 7B version would offer the performance required for tasks like text generation or summarization. View on Hugging Face.

Llama 3.1 8B: With 8 billion parameters, this small open-source AI model offers a balance of power and efficiency and is well suited to tasks like sentiment analysis and answering questions. View on Hugging Face.

Phi-3.5: A lightweight, open-source model family from Microsoft that excels in reasoning, multilingual processing, code generation, and image comprehension, even when compared to LLMs with far more parameters. View on Hugging Face.

Mistral Nemo 12B: With 12 billion parameters, this small open-source model is well suited to complex NLP tasks like real-time dialogue systems or language translation. It can also run locally with minimal infrastructure. View on Hugging Face.

Mixtral: This model from Mistral AI uses a "mixture of experts" architecture, activating only 12.9 billion of its 46.7 billion parameters for each token it processes. This makes it highly efficient compared to dense models of similar total size. View on Hugging Face.
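The "mixture of experts" idea mentioned for Mixtral can be illustrated with a toy router. Mixtral 8x7B routes each token to 2 of 8 expert networks per layer. The sketch below mirrors only that top-2 selection, using made-up scalar "experts" and hand-written gate scores in place of real neural network layers, so treat every name and number in it as hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def top_k_route(gate_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are a softmax over the selected experts' scores, so they sum to 1.
    """
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    top = ranked[:k]
    m = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x: float, experts: List[Expert], gate_logits: Sequence[float],
                k: int = 2) -> float:
    """Run only the selected experts and combine their outputs by routing weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))


if __name__ == "__main__":
    # Eight toy experts; expert i simply multiplies its input by (i + 1).
    experts: List[Expert] = [lambda x, s=i + 1: x * s for i in range(8)]
    # Hypothetical gate scores for one token; a real model computes these with a learned layer.
    gate_logits = [0.1, 2.0, -1.0, 2.0, 0.0, 0.3, -0.5, 1.0]
    print("selected experts:", top_k_route(gate_logits))
    print("output:", moe_forward(1.0, experts, gate_logits))
```

Because only two of the eight experts run for any given token, the compute per token scales with the active parameters rather than the total, which is where the efficiency gain comes from.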


Limitations of small language models

While small language models punch above their weight, they’re not without their limitations, or exempt from the typical risks of AI. These specific limitations include:

1. Limited scope and generalization

Since they’re trained on more specific datasets, SLMs often lack the ability to generalize across diverse topics or domains, which can lead to hallucinations (where AI posits an error as truth). Their narrower focus can also restrict their ability to adapt to novel tasks without additional fine-tuning.

2. Limited capacity for complex language

Due to their smaller parameter count and domain specialization, SLMs can struggle with nuanced language comprehension, contextual subtleties, or intricate linguistic patterns (unless trained for such). This makes them potentially less effective for sophisticated tasks than larger models, and can also impact the quality of their text generation outputs.

3. High-quality data dependence

SLMs require high-quality, curated datasets to operate effectively. Without them, output quality suffers because small models have less capacity to make sense of incomplete or noisy data.


Getting started with small language models

As enterprises work to find the best and least expensive AI model for their unique needs, small language models have emerged as a welcome alternative to LLMs. Not only are SLMs more efficient, customizable, and cost-effective than LLMs for targeted tasks, they also provide the accuracy, security, and data controls required to safely deploy AI at scale. As enterprises try to right-size their models, SLMs show that less can be more.

If you're ready to explore small language models for AI customer service, Sendbird can help. Our robust AI agent platform makes it easy to build AI agents on any model with a foundation of enterprise-grade infrastructure that offers the scalability, security, and adaptability required for any environment or application. To learn more, contact our team of AI experts.
